Dalton’s Atomic Theory

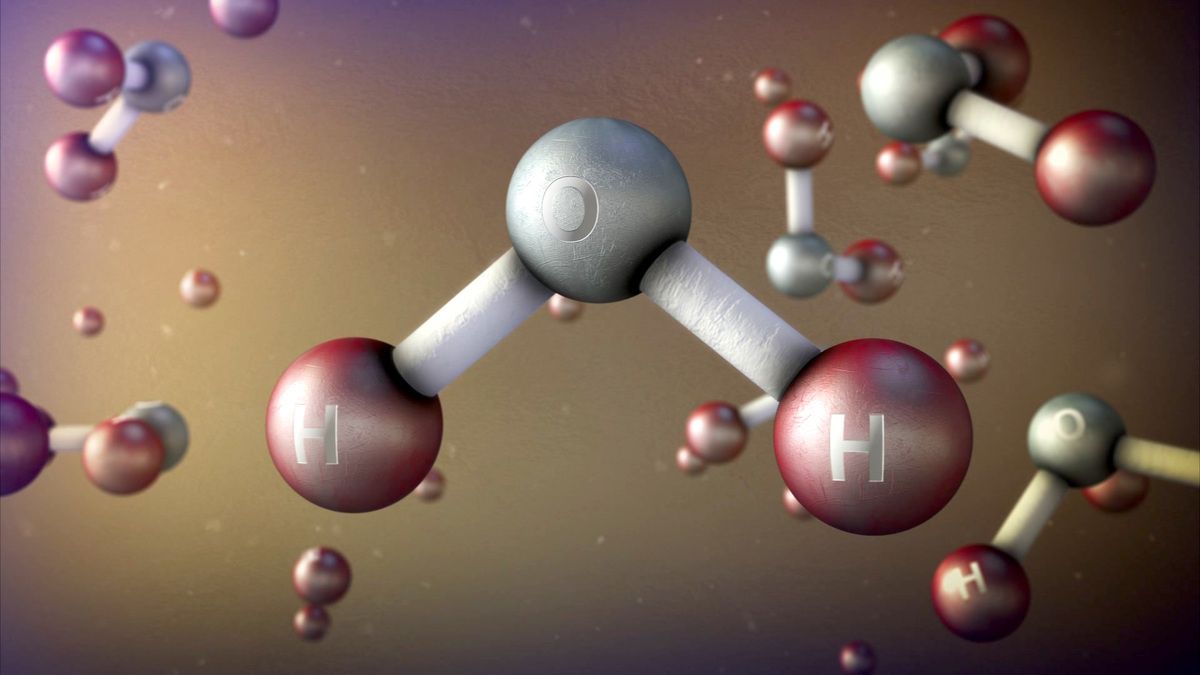

All matter in the universe is made up of extremely small particles called atoms. The word atom comes from the Greek word άτομο, which means indivisible. Many ancient philosophers, such as Kanada in India and Democritus in Greece, had a vague, qualitative idea of the ultimate constituents of matter. Their views are …